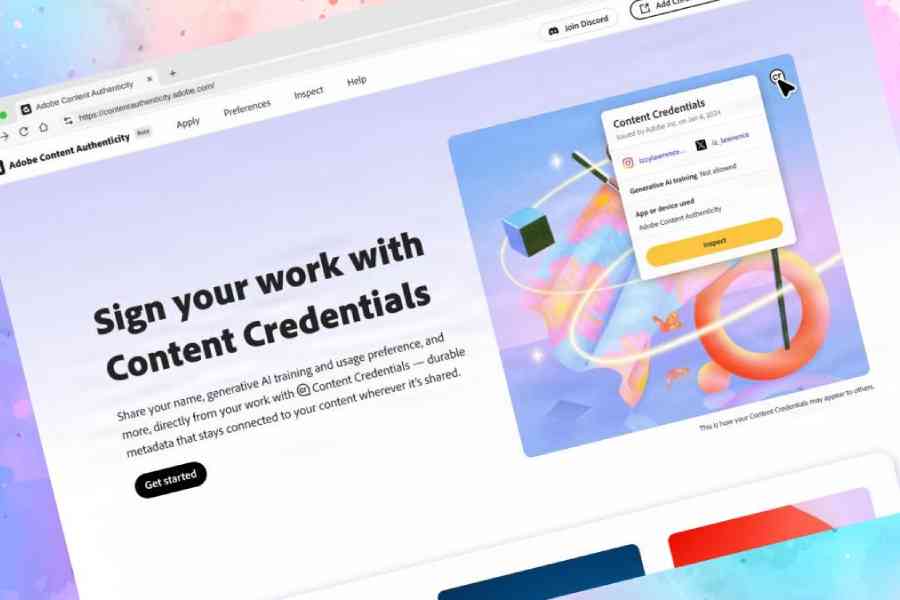

Adobe has unveiled the Adobe Content Authenticity web app, a free tool designed to mitigate the rise of AI-driven deepfakes, misinformation and content theft. The app allows users to apply Content Credentials, metadata that serves as a “nutrition label” for digital content, to help protect their creations from unauthorised use.

Coming in the first quarter of 2025 as a public beta, it will require a free Adobe account but not an active subscription to any Adobe services. The app can be used to attach attribution data to content, including the creator’s name, website, social media pages, and more. It will also make it easier for creatives to opt their work out of AI training en masse, rather than submitting separate opt-out requests to each AI provider.

Content Credentials are tamper-evident metadata. The web app will integrate with Adobe’s Firefly AI models, alongside Photoshop, Lightroom, and other Creative Cloud apps that already support Content Credentials individually.
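To illustrate what “tamper-evident” means in general terms, here is a minimal Python sketch. It is not Adobe’s implementation: the function names, the manifest layout and the shared `SIGNING_KEY` are all assumptions made for illustration, and a keyed hash (HMAC) stands in for the certificate-based signatures a real credential system would use. The idea is simply that attribution data is signed together with a hash of the content, so changing either one makes verification fail.

```python
import hashlib
import hmac
import json

# Hypothetical key for illustration only; a real system would use
# certificate-based signatures rather than a shared secret.
SIGNING_KEY = b"demo-key-for-illustration-only"


def make_credential(content: bytes, attribution: dict) -> dict:
    """Bind attribution data to content by signing both together."""
    manifest = {
        "content_sha256": hashlib.sha256(content).hexdigest(),
        "attribution": attribution,
    }
    # Serialise deterministically so the same manifest always signs the same way.
    payload = json.dumps(manifest, sort_keys=True).encode("utf-8")
    signature = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    return {"manifest": manifest, "signature": signature}


def verify_credential(content: bytes, credential: dict) -> bool:
    """Return True only if neither the manifest nor the content has changed."""
    payload = json.dumps(credential["manifest"], sort_keys=True).encode("utf-8")
    expected = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, credential["signature"]):
        return False  # the metadata was edited after signing
    current_hash = hashlib.sha256(content).hexdigest()
    return credential["manifest"]["content_sha256"] == current_hash
```

In this sketch, `verify_credential` rejects a credential whose creator name has been swapped, and also rejects content whose bytes no longer match the signed hash. It flags the change rather than preventing it, which is what “tamper-evident” implies.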

Besides giving artists credit for their work, Adobe’s credentials display an image’s editing history, including whether AI was used. The company is also working on a Google Chrome browser extension that will recognise credentials for content you come across online.

Adobe has confirmed that the labels will be accepted by Spawning, one of the biggest AI opt-out aggregators. Through its website, “Have I Been Trained?”, Spawning allows artists to check whether their artworks are present in the most popular training datasets. Adobe’s technology uses a combination of digital fingerprinting, invisible watermarking and cryptographic metadata to securely attach credentials to content. So even when someone takes a screenshot of a photo, the credentials stay attached.

Artists are concerned about how their work is being used, as misuse and misattribution of creative work become increasingly common.