In early July, a ‘civil servant’ committed suicide in South Korea. The remains were discovered at the bottom of a stairwell at the Gumi City Council. There was speculation that a punishing work schedule, a common phenomenon in East Asian economies, may have led to the ‘official’ taking this extreme step.

South Korea has a troublingly high suicide rate among advanced economies. So, under ordinary circumstances, the news of yet another suicide should not have raised eyebrows. But it did, because the civil servant in question was not supposed to be a sentient being. It was a robot, made by a California-based robot-waiter company, that had been pressed into service at the council. (South Korea, according to the International Federation of Robotics, employs one industrial robot for every 10 human employees.) What is remarkable is that ‘Robot Supervisor’ (it went by this nickname), much like its human counterparts, could apparently sense that it was under stress. Some of the Council’s staff have gone on record to suggest that the industrial robot had been spotted behaving oddly, circling in one spot, before it decided to snuff out the ‘life’ it possessed.

The conjecture about the robot’s perceived sentience, if it is true, would not be the stuff of fiction. In fact, the director of robotics and Artificial Intelligence at the Alan Turing Institute, who is also a chair professor of robotics at the University of Edinburgh, is of the firm opinion that robots would be capable of taking informed decisions within a decade; that, when it does happen, would be a clear hallmark of their autonomy and individual agency. This imminent future will have tantalising, indeed revolutionary, implications for such diverse human realms as law, labour and philosophy, to name a few. It is possible that law and labour would then have to reflect on issues like the rights and the entitlements of machine co-workers. It is not inconceivable that the minders of law and labour rights would wrestle with ethical as well as practical dilemmas about whether specific work hours should be mandated for machines so as to prevent not just industrial robots but also digital appliances, including our round-the-clock working smartphones, from sharing the sorry fate of Mr Robot Supervisor.

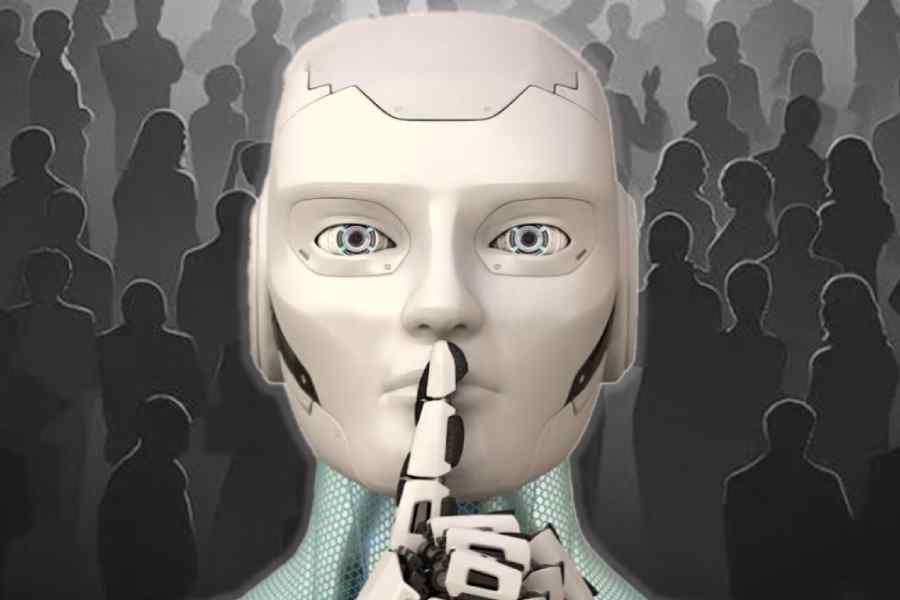

Public and policy discourses, it can be argued, are disproportionately focused on (enchanted with?) the evolving agency of mankind’s mechanical peers. So much so that the question regarding the lack of agency of a crucial segment of workers in the long and diverse supply chain that is involved in the creation of AI entities is slipping through the cracks in our conscience. Given their invisibility to the public eye and their remoteness from corporate welfare policy, it would be apt to describe these workers, integral to the emerging AI-centred production economy, as the proverbial spirits: the ghosts in the AI machine. Most of them (underprivileged women, students, professionals and so on) are tasked with petty responsibilities that include labelling images for machine learning models, helping voice assistants comprehend reality by transcribing audio clips, red-flagging content laced with violence or misinformation on social media platforms and the like. Code Dependent, Madhumita Murgia’s excellent investigative book, which offers disturbing snippets of the lives that are shaping and, in turn, being shaped by AI, should stand tall among the literary and research projects examining the plight of AI’s invisible workers.

The Guardian’s review of Code Dependent got it right when it said that this is a “story of how the AI systems built using… data benefit many of us… at the expense of some — usually individuals and communities that are already marginalised”. The global communities that Murgia retrieves from the peripheries to the centre range from “[d]isplaced Syrian doctors train[ing] medical software…” to help “diagnose prostate cancer in Britain”, “[o]ut-of-work college graduates in recession-hit Venezuela” who tailor products for e-commerce sites as well as — not many would know this — “[i]mpoverished women in [Calcutta’s] Metiabruz” who label voice clips for Amazon’s Echo speaker.

Code Dependent’s author and her peers, “researchers… mostly women of colour from outside the English-speaking West” (Mexico’s Paola Ricaurte, the Ethiopian researcher, Abeba Birhane, India’s Urvashi Aneja, and Milagros Miceli and Paz Peña from Latin America, among others), dismantle some of the fundamental myths being diligently propagated by the echo chambers (social media, transnational corporations, techno-industrial behemoths, the media) that are complicit in varnishing AI’s credentials. For instance, there is the claim of AI’s growing autonomous capacities rivalling human endeavour. But Murgia writes about “a badly kept secret about so-called artificial intelligence systems — that the technology does not ‘learn’ independently, and it needs humans, millions of them, to power it. Data workers are the invaluable human links in the global AI supply chain.” Then, there is the assertion of AI’s apparent potential to create innumerable new jobs. The World Economic Forum’s Future of Jobs report estimated that while 85 million jobs may be displaced by automation by 2025, 97 million new roles would emerge. But what needs to be questioned, indeed interrogated, is the nature of these new jobs. Would they be as poorly paid, and as punishing in their schedules, as the jobs that employ most of AI’s invisible army, especially those who occupy the lower tiers of the supply chain? To cite just one example, Murgia writes that at one outsourced facility in Kenya, workers were reportedly paid about $2.20 (£1.80) per hour for hours of work that involved sifting through imagery of human sacrifice, beheadings, hate speech and child abuse.

There is also an entire set of ethical quandaries that cannot go unaddressed in this context. Murgia’s digging into the maze-like cosmos of low-end labour that is critical to the generation of AI products reveals the globalised dimensions of this sweatshop workforce. Its vast scale is not an accident: it is intended to be global by design. A dispersed, anonymous, disaggregated workforce is necessary for the neutering of its agency and awareness of rights. Code Dependent chronicles the voices of many a disgruntled, disillusioned worker in the AI chain: some of these voices have also been silenced.

The redemption of AI’s very own gig economy workers, Murgia argues, lies in the calibration of their collective agency. In fact, AI, the fruit of such impoverished labour, could play a redeeming role here. It has been argued that there is potential for the technology to be deployed to identify workers left alienated — disempowered — by the work process so that steps can be taken by the authorities to redress the problem.

If AI can emancipate at least some of its oppressed creators — the ghosts that make the machine — the technology would be deemed not human, like that robot in South Korea, but humane.

uddalak.mukherjee@abp.in