The music video for Kendrick Lamar’s song, “The Heart Part 5”, bewildered audiences by featuring the late NBA player, Kobe Bryant. The video used deepfake technology to pay homage to the departed legend. Deepfakes are images and videos that have been manipulated using advanced deep learning tools such as autoencoders or generative adversarial networks.

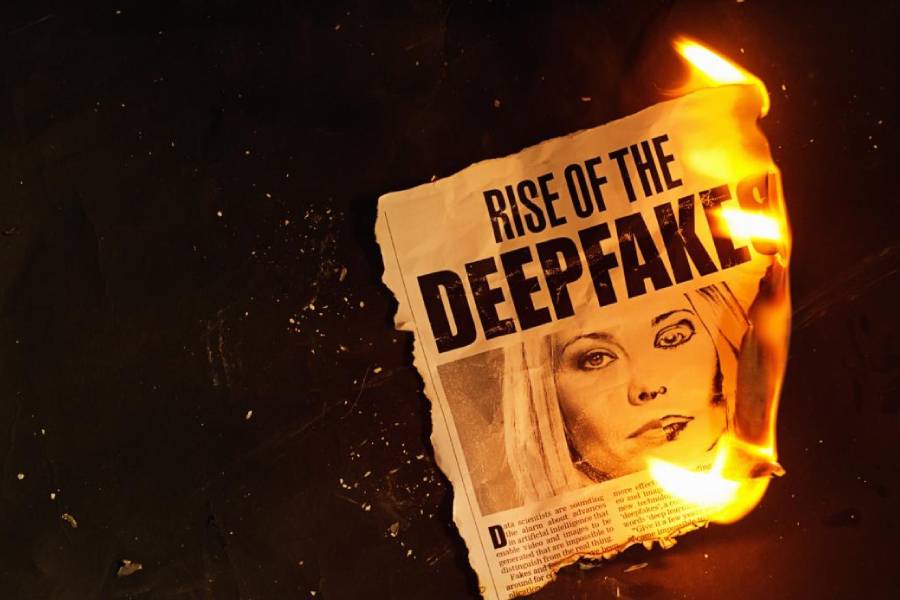

Deepfake technology makes it almost effortless to create realistic but manipulated media. But the technology is two-faced. While it has applications in film production, video games, and virtual reality, it can also be harnessed as a weapon in the fifth dimension of warfare: cyberwar. It can, additionally, be used to generate fake news for political propaganda and manipulation of the masses.

With the increasing penetration of the internet around the globe, cybercrime is surging. According to a report by the National Crime Records Bureau, there were about 50,000 cases of cybercrime in 2020. The national capital witnessed a 111% increase in cybercrime in 2021 compared to 2020, the NCRB reported. According to the data, most of these cases involved online fraud, online sexual harassment, and the publication of private content, among others. Deepfake technology may cause a rise in such cases, with manipulated media being weaponised for monetary gain. Significantly, the technology not only threatens the right to privacy enshrined in Article 21 of the Constitution but can also be instrumental in cases of humiliation, misinformation, and defamation. Deepfake voice phishing, whaling attacks, and scams targeting companies and individuals are thus likely to increase.

But this problem is not new. Images have been manipulated since photography’s inception and throughout its evolution, and video- and photo-editing software has been available for a while. Deepfake technology is, of course, a far more sophisticated challenge. Although a deepfake can be identified through deeper analysis, dedicated cybersecurity techniques are needed for the purpose.

ChatGPT, the generative Artificial Intelligence tool that has been in the limelight recently, can help resolve the challenges posed by deepfakes. It can be integrated into search engines to provide effective solutions. Based on Natural Language Processing, ChatGPT is trained to decline inappropriate requests, which helps deal with the spread of misinformation. It can also perform advanced reasoning tasks. The model has to be fine-tuned using supervised learning so that it can take down such content from the internet within seconds of its deployment. As it is open to customisation, it can be tailored further to provide a faster, more convenient, and cost-effective solution. But the training set and the test set should be properly monitored to prevent the AI from learning from existing deepfakes.

To this end, more experts are needed in cybersecurity. Spending on research and development, which currently stands at a mere 0.7% of India’s GDP compared to about 3.3% in developed countries like the United States of America, needs to be boosted. The National Cyber Security Policy 2013 must also be refined to adapt to new technologies and prevent the escalation of cybercrime, because such manipulations will get more sophisticated with time.

At a time when a large arsenal of smart tools is being developed, it is important to know the principles on which they rely. This is a prerequisite for protecting institutions and citizens. AI has changed the dynamics of cybercrime. This calls for an effort on the part of scientists and policymakers to deal with the emerging threats.