Dunlop: An app to recognise human emotions can help the mentally challenged, detect driver drowsiness and even catch a liar.

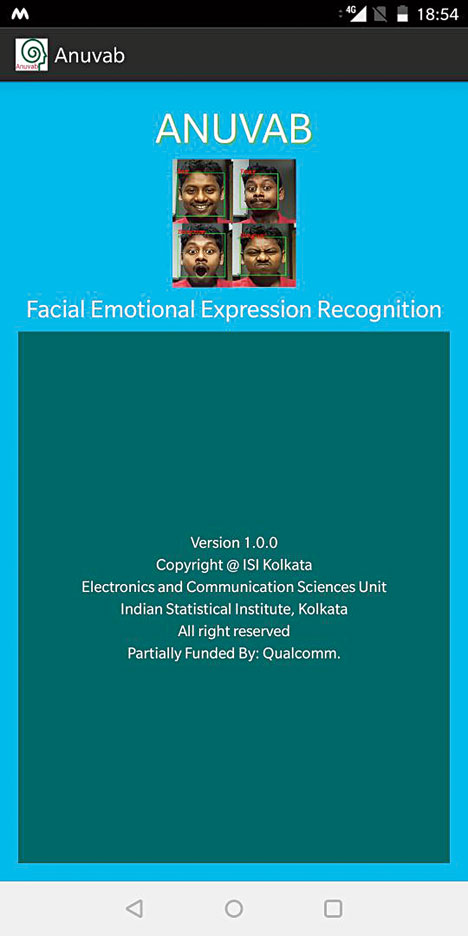

Anubhav, a facial emotion recognition app designed by students of the Indian Statistical Institute (ISI), Calcutta, can identify six basic emotions: joy, anger, surprise, disgust, fear and sadness.

The app, developed by PhD students of the Electronics and Communication Sciences Unit (ECSU) under the guidance of Dipti Prasad Mukhopadhyay, has won prizes at the regional and national levels of the Young IT Professional Award, organised by the Computer Society of India.

The app recognises emotions in three steps: face detection, facial feature extraction and machine learning, in which the machine is trained to classify the emotions.
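To make the three stages concrete, here is a toy pipeline in Python. It is not the ISI team's code. Face detection is assumed to have already supplied a bounding box, the feature extractor is a placeholder that averages pixel intensities over a coarse grid, and a simple nearest-centroid rule stands in for the trained classifier. All function names are hypothetical.

```python
from statistics import fmean

Image = list[list[float]]        # grayscale image as rows of pixel intensities
Box = tuple[int, int, int, int]  # (top, left, height, width), assumed to come from a face detector

def detect_face(image: Image, box: Box) -> Image:
    """Stage 1 (assumed done elsewhere): crop the face region given by the detector's box."""
    top, left, height, width = box
    return [row[left:left + width] for row in image[top:top + height]]

def extract_features(face: Image, grid: int = 2) -> list[float]:
    """Stage 2 (placeholder): mean intensity of each cell in a grid x grid layout.
    The face must be at least grid x grid pixels, or a cell would be empty."""
    h, w = len(face), len(face[0])
    feats = []
    for gi in range(grid):
        for gj in range(grid):
            cell = [face[i][j]
                    for i in range(gi * h // grid, (gi + 1) * h // grid)
                    for j in range(gj * w // grid, (gj + 1) * w // grid)]
            feats.append(fmean(cell))
    return feats

def train(samples: list[tuple[list[float], str]]) -> dict[str, list[float]]:
    """Stage 3, training: average the feature vectors seen for each emotion label."""
    groups: dict[str, list[list[float]]] = {}
    for feats, label in samples:
        groups.setdefault(label, []).append(feats)
    return {label: [fmean(col) for col in zip(*vecs)] for label, vecs in groups.items()}

def classify(centroids: dict[str, list[float]], feats: list[float]) -> str:
    """Stage 3, prediction: return the label whose centroid is nearest (squared distance)."""
    return min(centroids,
               key=lambda label: sum((c - f) ** 2 for c, f in zip(centroids[label], feats)))

if __name__ == "__main__":
    # Toy 4x4 "images": bright upper half vs bright lower half.
    upper = [[9, 9, 9, 9], [9, 9, 9, 9], [1, 1, 1, 1], [1, 1, 1, 1]]
    lower = [[1, 1, 1, 1], [1, 1, 1, 1], [9, 9, 9, 9], [9, 9, 9, 9]]
    box = (0, 0, 4, 4)
    model = train([(extract_features(detect_face(upper, box)), "joy"),
                   (extract_features(detect_face(lower, box)), "sadness")])
    print(classify(model, extract_features(detect_face(upper, box))))  # joy
```

The two toy images are not faces and the emotion labels are arbitrary. The point is only the shape of the pipeline, in which each stage's output becomes the next stage's input.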

"The machine does texture analysis in terms of finding Local Binary Patterns from the set of training image data," Rivu Chakraborty, a researcher at ECSU and part of the team that developed the app, said.

"There are key landmark areas in the face from which the software picks up features... we have developed an algorithm based on 94 landmark points to classify data - in this case the image of a face displaying emotions."
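Chakraborty's description combines two ideas: Local Binary Patterns (LBP) as a measure of texture, and landmark points that say where on the face to measure it. The sketch below shows the basic 3×3 form of LBP and one common way of tying it to landmarks: compute a histogram of LBP codes in a small window around each landmark and concatenate the histograms. The window size, neighbour ordering and helper names are assumptions. The article does not say which LBP variant the team used or how its 94 landmarks are located, so the landmark coordinates are simply taken as input here.

```python
from typing import Sequence

Image = list[list[int]]   # grayscale image as rows of pixel intensities
Point = tuple[int, int]   # (row, column) of a facial landmark

# Offsets of the 8 neighbours, clockwise from the top-left corner (assumed ordering).
NEIGHBOURS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]

def lbp_code(image: Image, i: int, j: int) -> int:
    """8-bit LBP code of pixel (i, j): bit k is set when neighbour k >= the centre."""
    centre = image[i][j]
    code = 0
    for k, (di, dj) in enumerate(NEIGHBOURS):
        if image[i + di][j + dj] >= centre:
            code |= 1 << k
    return code

def lbp_histogram(image: Image) -> list[int]:
    """Texture descriptor: how often each of the 256 codes occurs over interior pixels."""
    hist = [0] * 256
    for i in range(1, len(image) - 1):
        for j in range(1, len(image[0]) - 1):
            hist[lbp_code(image, i, j)] += 1
    return hist

def crop_patch(image: Image, centre: Point, half: int) -> Image:
    """Square window of side 2*half+1 around a landmark, clipped at the image border."""
    r, c = centre
    rows = range(max(0, r - half), min(len(image), r + half + 1))
    cols = range(max(0, c - half), min(len(image[0]), c + half + 1))
    return [[image[i][j] for j in cols] for i in rows]

def landmark_lbp_features(image: Image, landmarks: Sequence[Point], half: int = 2) -> list[int]:
    """Concatenate one LBP histogram per landmark patch into a single feature vector."""
    feats: list[int] = []
    for point in landmarks:
        feats.extend(lbp_histogram(crop_patch(image, point, half)))
    return feats
```

A flat patch produces code 255 everywhere, while edges and corners produce other codes, so the histogram summarises the local texture. Because one 256-bin histogram is appended per landmark, the vector grows with the number of landmarks: under this sketch's assumptions, 94 landmarks would give 94 × 256 = 24,064 numbers per face for the classifier to learn from.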

Such emotion readings could be applied in Human-Computer Interaction, medical sciences, criminology, consumer behaviour, TRP ratings, voter behaviour and the detection of driver drowsiness, among other areas.

The work was published this year in The Visual Computer 34(2) in a paper titled "Anubhav: Recognising Emotions Through Facial Expression", by Swapna Agarwal, Bikash Santra and Mukhopadhyay.

"Emotions control our decisions. When we go to a supermarket, we see a lot of things and our emotions are at work that influence our purchases; similarly advertisements on television and Internet, voter behaviour are all controlled by our emotions," Santra said.

Emotions are expressed through voice, gestures, body language and other channels. Facial expression is the most influential of these, and it can be either spontaneous or artificial.

Genuine emotions are spontaneously expressed on the face.

"At times, we display artificial expressions like a fake smile to greet someone," Swapna Agarwal said.

"We may also wish to hide our emotion. This is where lie detection comes into play. The feeling of emotions is decoded by the central part of the brain.

"The decoded signals then get transferred to motor neurons that display the facial expressions. This process takes around 0.1 to 0.5 seconds.

"The frontal lobe of the brain is the decision-maker. If after realising the emotion, the brain decides to hide the emotion from the external world, it sends signals to the motor neurons to control the expressions.

"But the facial expressions that have already appeared on the face during this time are called micro expressions.

"These are hard to detect with the naked eye because of their short duration and minute magnitude. Videos can capture these subtle facial expressions. And so if we have an algorithm to detect these micro expressions from these videos, it can be used to detect lies and other things," she said.
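The timing she describes is why video helps. At 30 frames per second (a common frame rate, assumed here), an expression lasting 0.1 to 0.5 seconds spans only about 3 to 15 frames, but every one of those frames is recorded. A minimal sketch of the detection idea, assuming some classifier has already labelled each frame, is to look for runs of a non-neutral expression that last no longer than 0.5 seconds. The cut-off borrows the upper end of the time range quoted above, and the function is hypothetical rather than the team's method; real micro-expression detectors also have to cope with noisy per-frame labels.

```python
def short_episodes(labels: list[str], fps: float, max_seconds: float = 0.5,
                   neutral: str = "neutral") -> list[tuple[str, int, int]]:
    """Find runs of a non-neutral expression lasting at most max_seconds.

    labels holds one (assumed) per-frame expression label; the result lists
    (label, first_frame, number_of_frames) for each short run.
    """
    max_frames = int(max_seconds * fps)
    episodes = []
    start = 0
    for i in range(1, len(labels) + 1):
        # A run ends at the end of the video or where the label changes.
        if i == len(labels) or labels[i] != labels[start]:
            length = i - start
            if labels[start] != neutral and length <= max_frames:
                episodes.append((labels[start], start, length))
            start = i
    return episodes

if __name__ == "__main__":
    # At 30 frames per second, 0.5 s is 15 frames.
    frames = ["neutral"] * 10 + ["anger"] * 4 + ["neutral"] * 10 + ["joy"] * 40
    print(short_episodes(frames, fps=30))  # [('anger', 10, 4)]
```

In the example, the four-frame flash of anger (about 0.13 seconds) is flagged, while the 40-frame run of joy is treated as an ordinary, deliberate expression.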

Psychiatrists can use such algorithms, together with video recordings, to assess whether a patient is depressed.

"The app can be found useful by schizophrenics to analyse and see for themselves how their fleeting moods change," Santra said.

While ISI does not itself build applications from the algorithms its students develop, some companies fund such research to strengthen the industry-academia interface.

"Qualcomm had collaborated with ISI, Calcutta, and funded them for the Automatic Recognition and Synthesis of Facial Expressions," Dipti Prasad Mukhopadhyay said. "Qualcomm gave us the initial fund and monitored our research in this field for a couple of years.

"They were interested in finding a way to detect driver drowsiness. Unfortunately, their research group in this area moved out of the country. We then approached the Centre's Department of Science and Technology, which supported a PhD fellowship to continue work on this project."

Taking forward her PhD work, Agarwal is now working towards creating Human-Computer Interaction systems that are emotionally intelligent.

Such machines would be able to understand human emotions and respond in kind. "Emotional Intelligence (EI) is the more focused area of the much-talked-about Artificial Intelligence (AI)," she said.