Sound recognition

This important tool in the Accessibility section can benefit people who are hard of hearing. Your iPhone will be able to listen for 14 sounds, including a door knock, sirens, a dog barking, a crying baby and a smoke alarm, and notify you when it hears one. Companies like Amazon have already used AI-based sound recognition as a safety measure, detecting the sound of alarms or broken glass as part of the Alexa Guard home security system. Let’s see how Apple develops the feature.

Back tap

It’s one of those wow features. You can double tap or triple tap the back of your iPhone to perform a custom task. This means you can link the tap to something like taking a screenshot or muting your phone. You can also use taps to activate shortcuts, which is very useful. Say you want to tweet or send a message: why not use a certain kind of tap to open a shortcut?! Pretty neat.

Stackable widgets

Many people are saying widgets are an Android thing. They’re not. First, the widgets look better than on Android. Second, the widgets are more in the mould of Windows phones. The widgets come in three different sizes and you can place them anywhere on the screen. The other thing to like about the Apple widgets is that you can stack them. There is something called Smart Stack, which intelligently stacks widgets one on top of another, depending on the time of the day or importance. It’s a clever touch and makes it fun to go through the widgets.

Sign language

FaceTime will now be able to detect when someone is using sign language and automatically make that person more prominent in a video call.

Against tracking

Apps may track you even when you are not using them. How do you get to know about it on iOS 14? Apple user privacy software manager Katie Skinner said during the WWDC keynote that tracking should be “transparent” and “under your control”. In iOS 14, you’ll see a prompt when an app is trying to track you across other services. You’ll have the option to ‘Allow Tracking’ or ‘Ask App Not to Track’. Agreed, it won’t stop inter-app tracking completely, but it’s a step in the right direction.

Privacy on Safari

Safari is the best browser out there and Apple has already taken a lot of steps against ad trackers. In macOS Big Sur, Safari will include a ‘Privacy Report’ that breaks down what Safari is blocking and gives you more insight into which trackers are popping up in your daily browsing. And now that more browser extensions will be supported, Safari will include more controls over privacy settings.

Hand and body pose

Starting in iOS 14 and macOS Big Sur, developers will be able to use the updated Vision framework to detect human body and hand poses in photos and videos within their apps. This could enable a wide variety of features, in fitness apps, for instance, or in a media-editing app that finds photos or videos based on pose.

Change your default browser and mail apps

It’s the one big feature that wasn’t talked about, but it’s there. So far your iPhone has been using the built-in Mail and Safari apps as your defaults. Here comes a change. In iOS 14, you’ll have the ability to set default mail and browsing apps, and that includes third-party options. So when you click a web link, it will open in the browser of your choice. Frankly, Apple doesn’t have to worry about this because Safari remains the best.

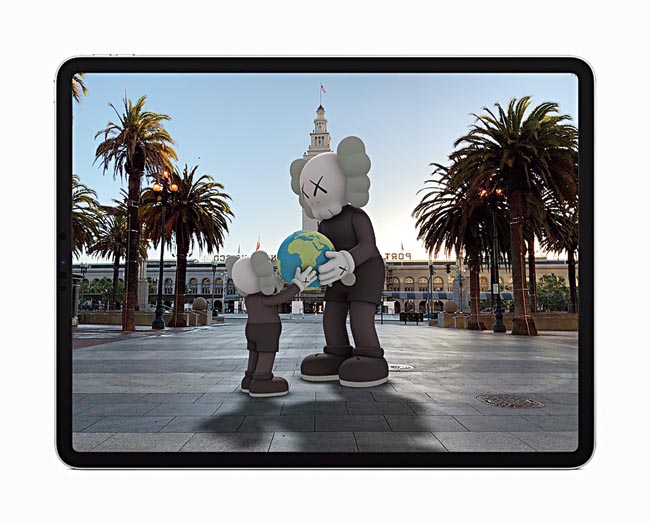

ARKit 4 introduces Location Anchors, which allow developers to pin their AR experiences to a specific geographic coordinate, such as this KAWS sculpture, which can be viewed through the Acute Art app. Sourced by the Telegraph

ARKit 4

The latest version of ARKit introduces changes like the ability to place virtual objects and extended face-tracking support on any device powered by an A12 Bionic chip or later. Developers can use the Depth API to drive powerful new features in their apps, like taking body measurements for more accurate virtual try-on, or testing how paint colours will look before painting a room. ARKit 4 also introduces Location Anchors for iOS and iPadOS apps, which leverage the higher-resolution data of the new map in Apple Maps, where available, to pin AR experiences to a specific point in the world.

No wonder there is a LiDAR sensor on the new iPad Pro! If the next iPhone gets a LiDAR sensor, imagine the possibilities.

Will your device support it?

Supports iOS 14: iPhone 11, iPhone 11 Pro, iPhone 11 Pro Max, iPhone XS, iPhone XS Max, iPhone XR, iPhone X, iPhone 8, iPhone 8 Plus, iPhone 7, iPhone 7 Plus, iPhone 6s, iPhone 6s Plus, iPhone SE (1st generation), iPhone SE (2nd generation), iPod touch (7th generation)

Supports iPadOS 14: iPad Pro 12.9-inch (4th generation), iPad Pro 11-inch (2nd generation), iPad Pro 12.9-inch (3rd generation), iPad Pro 11-inch (1st generation), iPad Pro 12.9-inch (2nd generation), iPad Pro 12.9-inch (1st generation), iPad Pro 10.5-inch, iPad Pro 9.7-inch, iPad (7th generation), iPad (6th generation), iPad (5th generation), iPad mini (5th generation), iPad mini 4, iPad Air (3rd generation), iPad Air 2

Supports watchOS 7: Apple Watch Series 3, Apple Watch Series 4