Hod Lipson, a mechanical engineer who directs the Creative Machines Lab at Columbia University, US, has shaped most of his career around what some people in his industry have called the c-word.

On a sunny morning this past October, the Israeli-born roboticist sat behind a table in his lab and explained himself. “This topic was taboo,” he said. “We were almost forbidden from talking about it — ‘Don’t talk about the c-word; you won’t get tenure’ — so in the beginning I had to disguise it, like it was something else.”

That was back in the early 2000s, when Lipson was an assistant professor at Cornell University, US. He was working to create machines that could note when something was wrong with their own hardware and then change their behaviour to compensate for that impairment without the guiding hand of a programmer.

This sort of built-in adaptability, Lipson argued, would become more important as we became more reliant on machines. Robots were being used for surgical procedures, food manufacturing and transportation; the applications for machines seemed pretty much endless, and any error in their functioning, as they became more integrated with our lives, could spell disaster. “We’re literally going to surrender our life to a robot,” he said. “You want these machines to be resilient.”

One way to do this was to take inspiration from nature. Animals, and particularly humans, are good at adapting to changes. Lipson wondered whether he could replicate this kind of natural selection in his code, creating a generalisable form of intelligence that could learn about its body and function no matter what that body looked like, and no matter what that function was.

Lipson earned tenure, and his reputation grew. So, over the past couple of years, he began to articulate his fundamental motivation for doing all this work. He began to say the c-word out loud: he wants to create conscious robots.

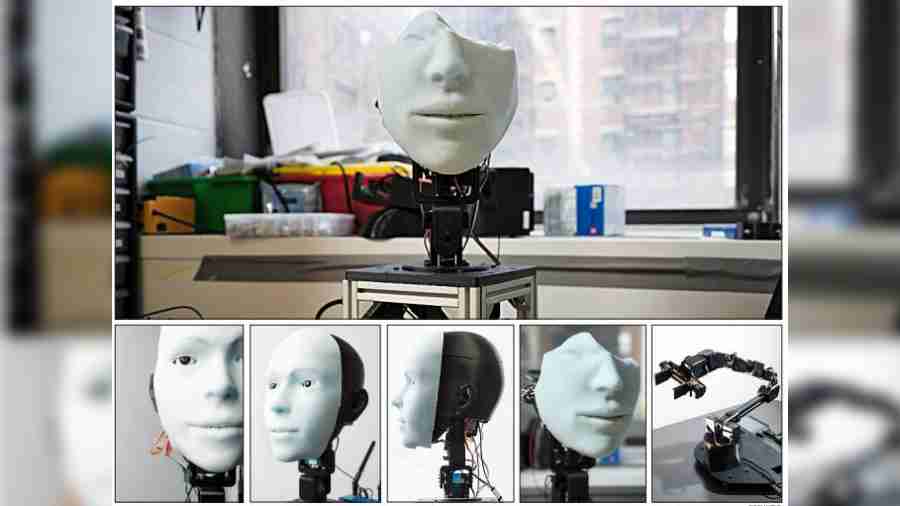

The Creative Machines Lab, on the first floor of Columbia’s Seeley W. Mudd Building, is organised into boxes. The room itself is a box, broken into boxy workstations lined with boxed cubbies. Within this order, robots and pieces of robots are strewn about.

The first difficulty with studying the c-word is that there is no consensus around what it actually refers to.

Trying to render the squishy c-word using tractable inputs and functions is a difficult, if not impossible, task. Most roboticists and engineers tend to skip the philosophy and form their own functional definitions. Thomas Sheridan, a professor emeritus of mechanical engineering at the Massachusetts Institute of Technology, said that he believed consciousness could be reduced to a certain process and that the more we find out about the brain, the less fuzzy the concept will seem.

Lipson and the members of the Creative Machines Lab fall into this tradition. He settled on a practical criterion for consciousness: the ability to imagine yourself in the future.

The benefit of taking a stand on a functional theory of consciousness is that it allows for technological advancement.

One of the earliest self-aware robots to emerge from the Creative Machines Lab had four hinged legs and a black body with sensors attached at different points. By moving around and noting how the information entering its sensors changed, the robot created a stick-figure simulation of itself. As the robot continued to move around, it used a machine-learning algorithm to improve the fit between its self-model and its actual body. The robot used this self-image to figure out, in simulation, a method of moving forward. Then it applied this method to its body; it had figured out how to walk without being shown how to walk.
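The loop described here (move, sense, refine a self-model, plan in simulation, then act) can be illustrated with a toy sketch. This is not the lab's code or algorithm: the "body" below is a hypothetical two-motor system whose hidden gains stand in for unknown leg geometry, the learning rule is plain gradient descent on prediction error, and every name and number is an assumption made for illustration.

```python
import random

# Hypothetical "real body": hidden gains the robot cannot read directly.
TRUE_LEG_GAINS = (0.8, -0.3)


def real_body(command, rng):
    """Run a motor command on the body and return the noisy sensed displacement."""
    u1, u2 = command
    noise = rng.gauss(0.0, 0.01)
    return TRUE_LEG_GAINS[0] * u1 + TRUE_LEG_GAINS[1] * u2 + noise


def self_model(gains, command):
    """Predict displacement from the robot's current estimate of its own body."""
    return gains[0] * command[0] + gains[1] * command[1]


def learn_self_model(rng, trials=300, lr=0.3):
    """Try random commands and nudge the self-model toward what was sensed."""
    gains = [0.0, 0.0]  # the robot starts with no knowledge of its body
    for _ in range(trials):
        command = (rng.uniform(-1, 1), rng.uniform(-1, 1))
        sensed = real_body(command, rng)
        error = self_model(gains, command) - sensed
        # Gradient step on the squared prediction error.
        gains[0] -= lr * error * command[0]
        gains[1] -= lr * error * command[1]
    return gains


def plan_in_simulation(gains, candidates):
    """Pick the command the self-model predicts will move the robot farthest forward."""
    return max(candidates, key=lambda c: self_model(gains, c))


rng = random.Random(0)  # fixed seed so the run is repeatable
gains = learn_self_model(rng)
candidates = [(u1, u2) for u1 in (-1, 0, 1) for u2 in (-1, 0, 1)]
best = plan_in_simulation(gains, candidates)
moved = real_body(best, rng)  # only now is the plan tried on the real body
```

The point of the sketch is the ordering: the plan is chosen entirely inside the learned model, and the real body is touched only to gather data and to carry out the final choice.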

This represented a major step forward, said Boyuan Chen, a roboticist at Duke University who worked in the Creative Machines Lab. “In my previous experience, whenever you trained a robot to do a new capability, you always saw a human on the side,” he said.

Recently, Chen and Lipson published a paper in the journal Science Robotics that revealed their newest self-aware machine, a simple two-jointed arm that was fixed to a table. Using cameras set up around it, the robot observed itself as it moved. Initially, it had no sense of where it was in space, but over the course of a couple of hours, with the help of a powerful deep-learning algorithm and a probability model, it was able to pick itself out in the world.

The risk of committing to any theory of consciousness is that doing so opens up the possibility of criticism. Sure, self-awareness seems important, but aren’t there other key features of consciousness? Can we call something conscious if it doesn’t feel conscious to us?

Antonio Chella, a roboticist at the University of Palermo in Italy, believes that consciousness can’t exist without language and has been developing robots that can form internal monologues, reasoning to themselves and reflecting on the things they see around them. One of his robots was recently able to recognise itself in a mirror, passing what is probably the most famous test of animal self-consciousness.

Joshua Bongard, a roboticist at the University of Vermont, US, and a former member of the Creative Machines Lab, believes that consciousness doesn’t just consist of cognition and mental activity, but has an essentially bodily aspect.

New York Times News Service