Simon Mackenzie, a security officer at the discount retailer QD Stores outside London, was short of breath. He had just chased after three shoplifters who had taken off with packages of laundry soap. Before the police arrived, he sat at a backroom desk to do something important: capture the culprits’ faces.

On an ageing desktop computer, he pulled up security camera footage, pausing to zoom in and save a photo of each thief. He then logged in to a facial recognition program, Facewatch, which his store uses to identify shoplifters. The next time those people enter any shop within a few miles that uses Facewatch, store staff will receive an alert.

“It’s like having somebody with you saying, ‘That person you bagged last week just came back in,’” Mackenzie said.

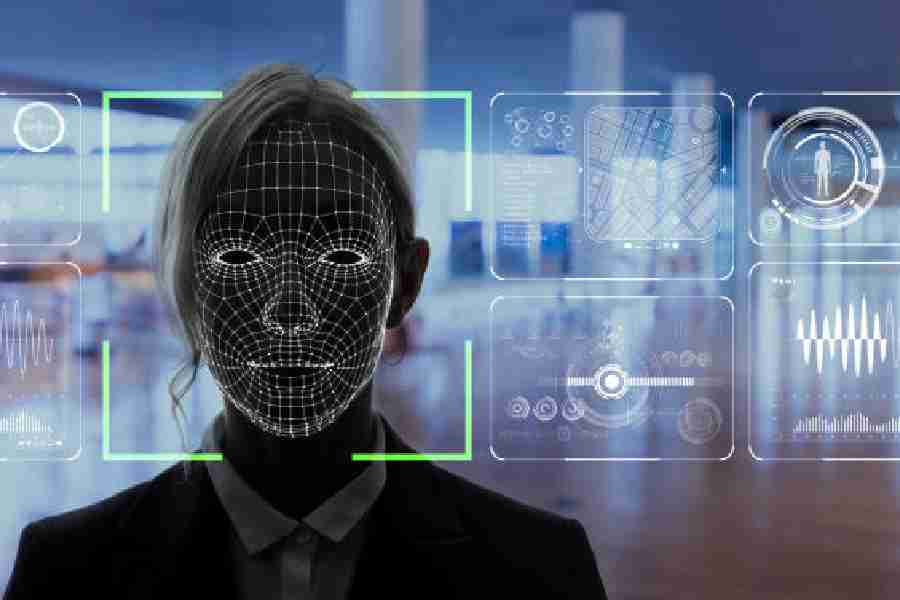

Use of facial recognition technology by police has been heavily scrutinised in recent years, but its application by private businesses has received less attention. Now, as the technology improves and its cost falls, these systems are reaching further into people's lives. Businesses are increasingly deploying them to identify shoplifters, problematic customers and legal adversaries.

Facewatch, a British company, is used by retailers frustrated by petty crime. When Facewatch spots a flagged face, an alert is sent to a smartphone at the shop.

Facial recognition technology is proliferating as Western countries grapple with advances brought on by AI. The European Union is drafting rules that would ban many of facial recognition’s uses, while Eric Adams, the mayor of New York City, US, has encouraged retailers to try the technology to fight crime.

But retailers' use of the technology has drawn criticism as a disproportionate response to minor crimes. Individuals have little means of knowing that they are on a watchlist or how to appeal. Big Brother Watch, a civil society group, called it "Orwellian in the extreme".

Facewatch, which licenses facial recognition software made by RealNetworks and Amazon, is now used in nearly 400 stores across Britain. Trained on millions of pictures and videos, the systems read the biometric information of a person's face as they walk into a shop and check it against a database of flagged people.

In October, a woman buying milk in a supermarket in Bristol, England, was confronted by an employee and ordered to leave. She was told that Facewatch had flagged her as a barred shoplifter.

The woman, whose story was corroborated by materials provided by her lawyer and Facewatch, said there must have been a mistake. When she contacted Facewatch a few days later, the company apologised, saying it was a case of mistaken identity.

After the woman threatened legal action, Facewatch dug into its records. It found that she had been added to the watchlist because of an incident 10 months earlier involving £20 worth of merchandise. The system "worked perfectly," Facewatch said.

But while the technology had correctly identified the woman, it left little room for human discretion. The woman said she did not recall the incident and had never shoplifted. She said she may have walked out without realising that her debit card payment had failed to go through at a self-checkout kiosk.

Madeleine Stone of Big Brother Watch said Facewatch was “normalising airport-style security checks for everyday activities like buying a pint of milk.”

Civil liberties groups have raised concerns about Facewatch and suggested that its deployment to prevent petty crime might be illegal under British privacy law, which requires that biometric technologies have a “substantial public interest.”

The UK Information Commissioner’s Office conducted a yearlong investigation into Facewatch. The office concluded in March that Facewatch’s system was permissible under the law, but only after the company made changes to how it operated.

NYTNS