The National Assessment and Accreditation Council (NAAC) was established in 1994 in response to the call by the National Policy on Education (1986) to “address the issues of deterioration in the quality of education”, and to the Programme of Action of 1992. The vision of NAAC is “to make quality the defining element of higher education in India through a combination of self and external quality evaluation, promotion and sustenance initiatives”. To realize this vision, it set itself the mission to “arrange for periodic assessment and accreditation of institutions of higher education…; to stimulate the academic environment for promotion of quality teaching-learning and research...; to encourage self-evaluation, accountability, autonomy and innovations in higher education;... and to collaborate with other stakeholders... for quality evaluation, promotion and sustenance.”

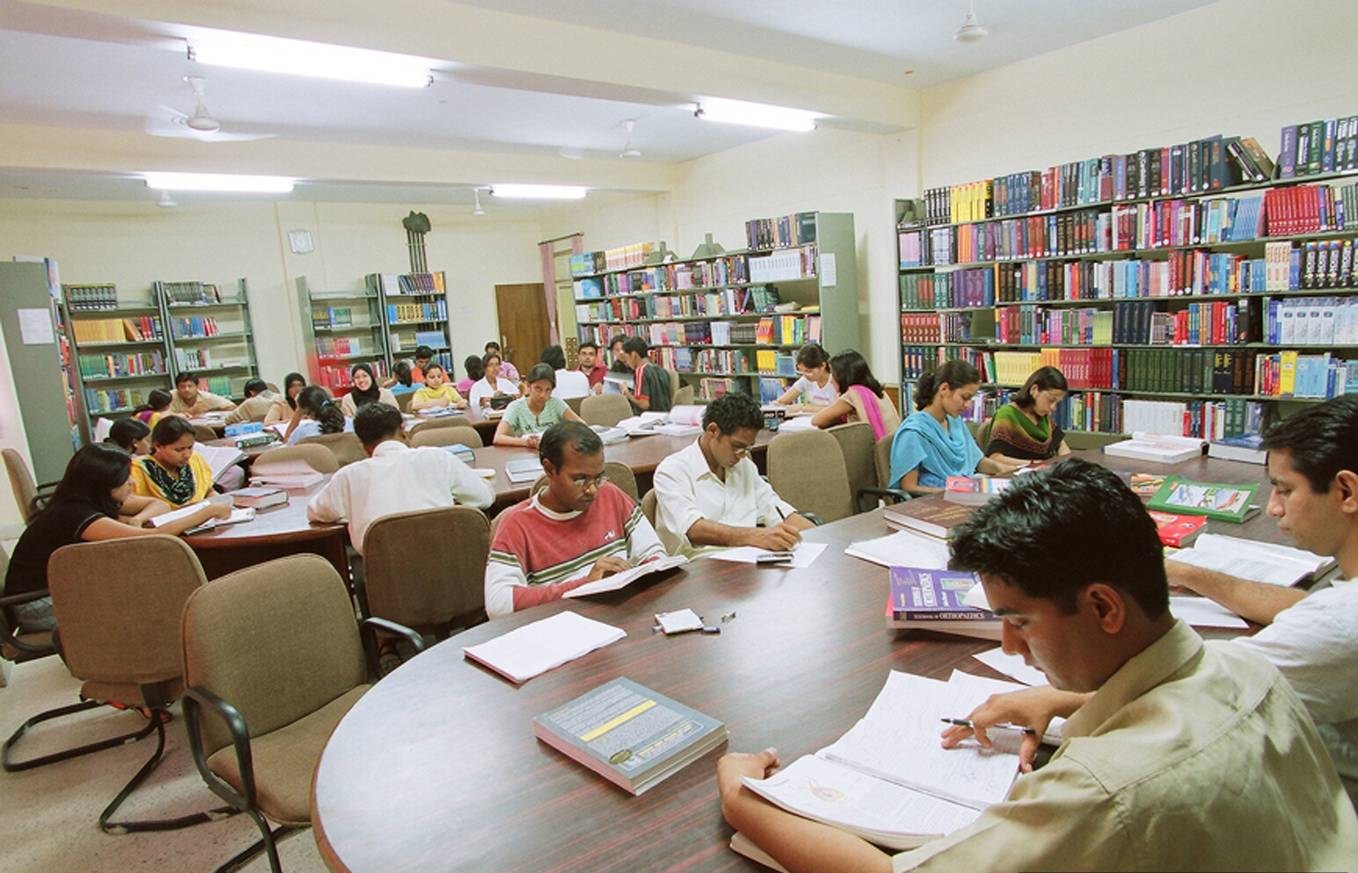

Higher education institutions have by now become used to the five-yearly assessment by NAAC. It is conducted on the basis of a self-assessment report furnished by the institution, which is then verified through a visit to the institution by a team of peers (typically senior academics with the requisite experience and expertise). At the end of the assessment by the peer team, an institution is assigned a grade: either a letter (‘A’, ‘B’ and so on, with or without plus signs) or, as is now the case, a score on a four-point scale. NAAC examines and assesses seven broad areas, ranging from curricular aspects, to research, innovation and extension, to governance, leadership and management, to institutional values and best practices. The idea is to get a holistic view of an institution of higher learning and to look at the ways in which it contributes to national development, prepares students for an increasingly globalized world and inculcates positive values, all of which will ultimately foster a culture of excellence in campuses across the nation. The NAAC assessment process takes into account the disparities that exist among institutions of higher education, so that, for example, a university in a rural area whose students come predominantly from tribal and backward communities is assessed differently from one in a large metropolis where relatively affluent and educated students vie for admission to its courses.

Most academics and academic administrators have come round to the view that the SWOC (Strengths, Weaknesses, Opportunities, and Challenges) analysis mandated by NAAC is, in the end, a worthwhile exercise, one that helps institutions make the necessary course corrections so that they can deliver the benefits of education more efficiently, more equitably, with greater transparency, and with the involvement of all stakeholders. Two things need to be noted here. First, the gap between two assessments, typically five years, allows institutions to carry out reforms in the manner best suited to their own internal dynamics. Second, since NAAC assessment is a continuous process, it awards no ranks to institutions. Some issues do, of course, remain. The quantification of publication and citation data has tended to favour the STEM (science, technology, engineering, mathematics) disciplines and has made it harder to assess the quality of research output in the humanities and social sciences. Assessing the outcomes of outreach activities (for example, in slums or among rural communities) is difficult at the best of times, since such activities are, by their very nature, hard to quantify.

Despite these issues, NAAC has had a beneficial effect on India’s higher education. But things changed dramatically with the arrival of the National Institutional Ranking Framework (NIRF) in 2015, which, as its name announces, is concerned with ranks, not just scores, and which, moreover, is an annual exercise with few of the checks and balances of NAAC. The NIRF institutionalizes the belief that competition is beneficial and leads to overall improvement, an assumption that might hold for sports but seems far less plausible for institutions of higher education in a country as bewilderingly diverse as ours. Most crucially, an annual exercise involving thousands of institutions cannot possibly carry out the verification that ought to be the cornerstone of any evaluation of large, often unwieldy and indubitably complex entities. To get around this, the NIRF has made institutions themselves responsible for submitting accurate data, and has asked them to upload the submitted data on their websites and keep it there for three years in the interests of transparency. It has also warned that any institution found manipulating data will be removed from the NIRF list and excluded from future rankings.

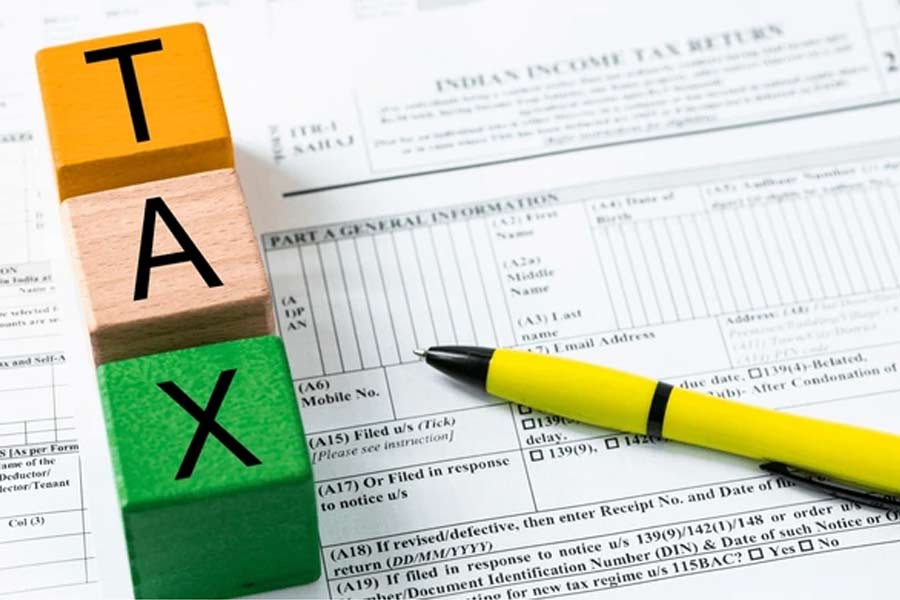

But, perhaps not surprisingly, institutions have not always been above tweaking data to get favourable scores and climb the rankings. To take just one example, this year’s NIRF ranking places the University of Calcutta fifth among all universities in India and makes it the top-ranked state university in the country, a matter of pride for all of us who are denizens of this city. Yet, as newspaper reports have pointed out, there seem to be strange discrepancies between the figures the university furnished to the NIRF in earlier years and the same data submitted for this year’s ranking. Consider two of them. According to the documents available on the NIRF website, the number of male students in Calcutta University’s three-year undergraduate programme seems to have fallen precipitously, from 1,644 in 2015-16 to a mere 28 in 2017-18, while the number of female students dipped only slightly, from 1,453 to 1,417, over the same period. Even more curiously, the median annual salary earned by students after completing their three-year undergraduate degree in 2015-16 was stated to be Rs 4,00,000 in the NIRF report for 2017, but the figure for the same graduating year (2015-16) is given as Rs 8,04,400 in the 2019 NIRF report. Since none of the university’s NIRF reports is available on its website, such figures cannot be double-checked. Quite obviously, something is amiss with the data the NIRF has published on one of the three oldest universities in the country, and, very likely, the submitted data was never cross-checked to remove anomalies and inadvertent errors.

The mad scramble for ranks, and the concomitant economizing with the truth, that the NIRF has unleashed seem to be tied to the devaluation of the idea of a university in our country, a devaluation that has accelerated under our present ruling dispensation. Universities are increasingly seen as training institutes designed to impart useful, practical skills rather than knowledge. It may well be true that a university creates knowledge, and perhaps even wisdom, but the first step towards such knowledge creation has to be challenging given knowledges, questioning and overturning existing ways of thinking and doing. This is something that only universities can do, and they can do so if and only if they are built around a set of core values found in the so-called ‘impractical’ and ‘un-utilitarian’ disciplines. Our leaders do not seem to want real universities, and they do not want them because they already know what is right and wrong, good and bad, proper and improper, and so on, for all of us. They certainly do not want universities to challenge, break or make redundant any kind of knowledge, least of all the knowledges they want us to internalize and disseminate. That is why they want ordinary academics, such as your writer, to spend their time tweaking data to run up high scores and rise in the rankings, rather than doing what they are mandated to do: showing younger people how to think critically, both for themselves and for others around them. That, rather than data fudging, is the real tragedy of the NIRF.