Tech companies rarely build accessibility features for people with low vision, people who can’t cope with fast animations or people who are slowly losing their voices. Apple has been an exception: year after year, the company has added accessibility features. Ahead of Global Accessibility Awareness Day on May 18, it showcased several major updates coming later this year. With Assistive Access, people with cognitive disabilities can use iPhone and iPad with greater ease and independence. With Live Speech, nonspeaking individuals can type to speak during calls and conversations. With Personal Voice, those at risk of losing their ability to speak can create a synthesised voice that sounds like them.

Personal Voice

Some people can no longer speak clearly, and some lose confidence in their voice as they age. Most people will never fully understand these problems. Apple's answer is Personal Voice. Rolling out later this year, the feature lets users type what they want to say on an iPhone and hear it spoken aloud in a voice that sounds like their own.

Personal Voice will let iPhones and iPads generate a digital reproduction of a user’s voice, both for in-person conversations and for phone, FaceTime and other audio calls. It is designed for people at risk of losing their ability to speak, such as those recently diagnosed with amyotrophic lateral sclerosis (ALS) or other conditions that can progressively affect speech.

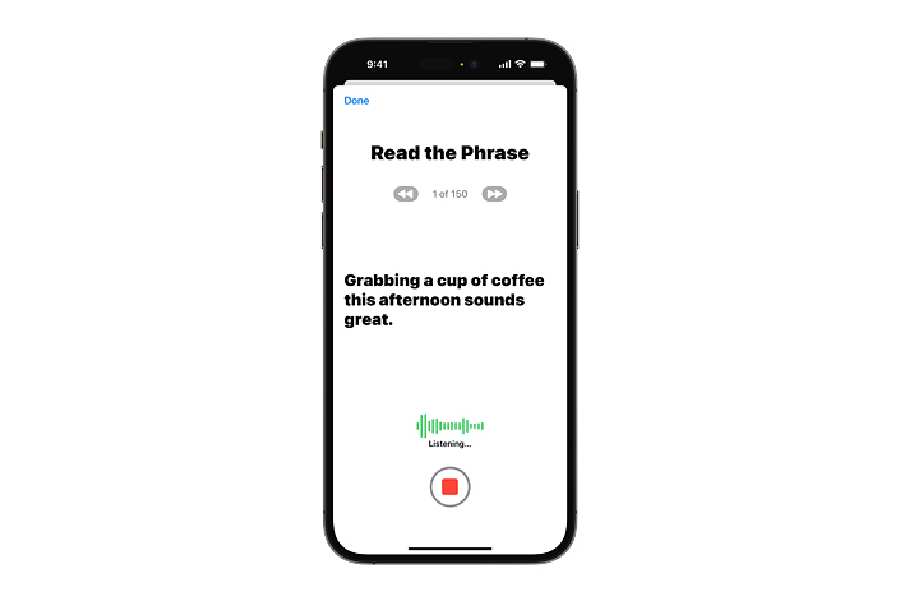

Using the feature appears simple, although the technology behind it is complex. Users create their Personal Voice by recording 15 minutes of audio on their device. The feature relies on on-device machine learning to keep users' information private and secure. It integrates with Live Speech, so users can speak with their Personal Voice when connecting with loved ones.

“At the end of the day, the most important thing is being able to communicate with friends and family. If you can tell them you love them, in a voice that sounds like you, it makes all the difference in the world — and being able to create your synthetic voice on your iPhone in just 15 minutes is extraordinary,” said Philip Green, board member and ALS advocate at the Team Gleason nonprofit, who has experienced significant changes to his voice since receiving his ALS diagnosis in 2018.

During the 15-minute recording process, users read aloud a randomly chosen set of prompts, and they can split the process across multiple sittings if they prefer. All processing is done on the device.

Live Speech

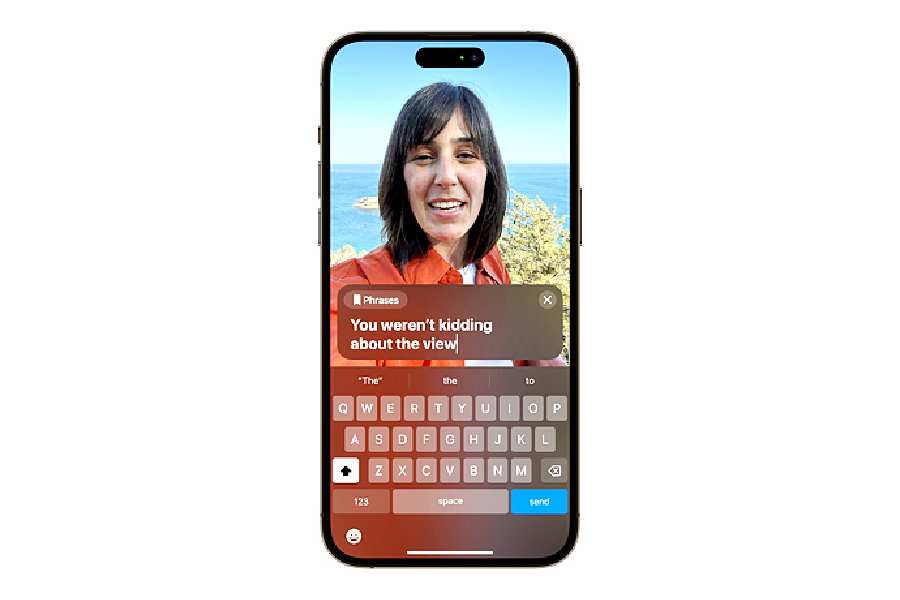

Live Speech is closely connected with Personal Voice. With the feature enabled, users can type messages on an iPhone, iPad or Mac and have the device read them aloud. Phrases a user says often can be saved as shortcuts and played aloud with a single tap.

If Personal Voice is not set up, Siri’s voice reads out your sentences instead. Live Speech can also be used during phone and FaceTime calls.

Personal Voice allows users at risk of losing their ability to speak to create a voice that sounds like them, and integrates seamlessly with Live Speech so users can speak with their Personal Voice when connecting with loved ones.

Live Speech on iPhone, iPad and Mac lets users type what they want to say and have it spoken aloud during phone and FaceTime calls, as well as in-person conversations.

Made for iPhone hearing devices can be paired directly with a Mac and customised to the user’s hearing comfort.

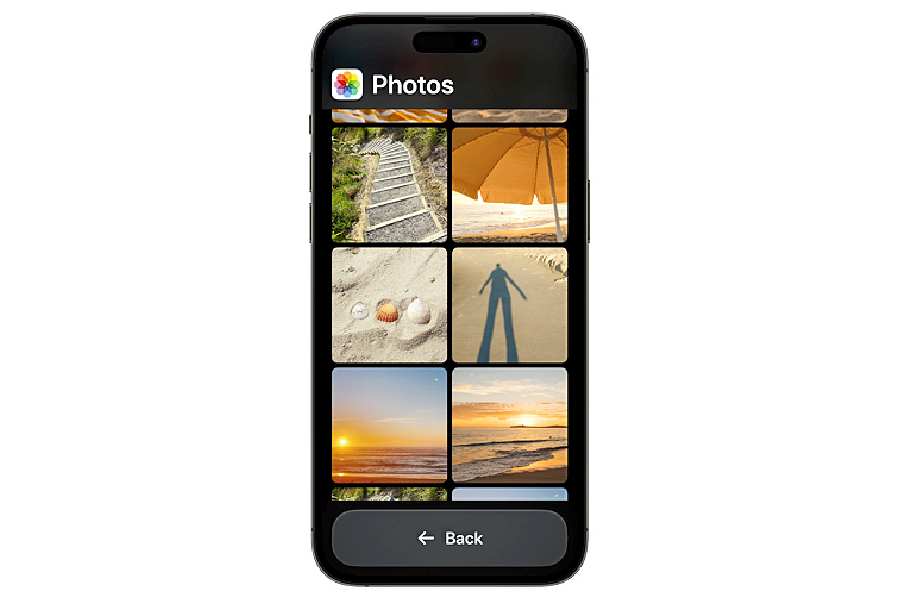

Assistive Access distils the Camera, Photos, Music, Calls and Messages apps on iPhone to their essential features in order to lighten users' cognitive load.

Assistive Access

The feature will support users with cognitive disabilities. It has been designed to “distil apps and experiences to their essential features in order to lighten cognitive load”. Assistive Access includes a customised experience for Phone and FaceTime, which have been combined into a single Calls app, as well as for Messages, Camera, Photos and Music. It will have a distinct interface with high-contrast buttons and large text labels, along with tools that help trusted supporters tailor the experience for the person they support. Apple gave one example of how this works: for users who prefer communicating visually, Messages includes an emoji-only keyboard and the option to record a video message to share with loved ones.

Point and Speak

Magnifier is Apple’s tool for people with low vision. Point and Speak is a new detection mode in Magnifier designed to help users “interact with physical objects with numerous text labels”. For example, a user can point the iPhone or iPad camera at the keypad of a microwave, and the device will read aloud each number or setting as the user moves a finger across it. Point and Speak combines input from the camera, the LiDAR Scanner and on-device machine learning to announce the text.