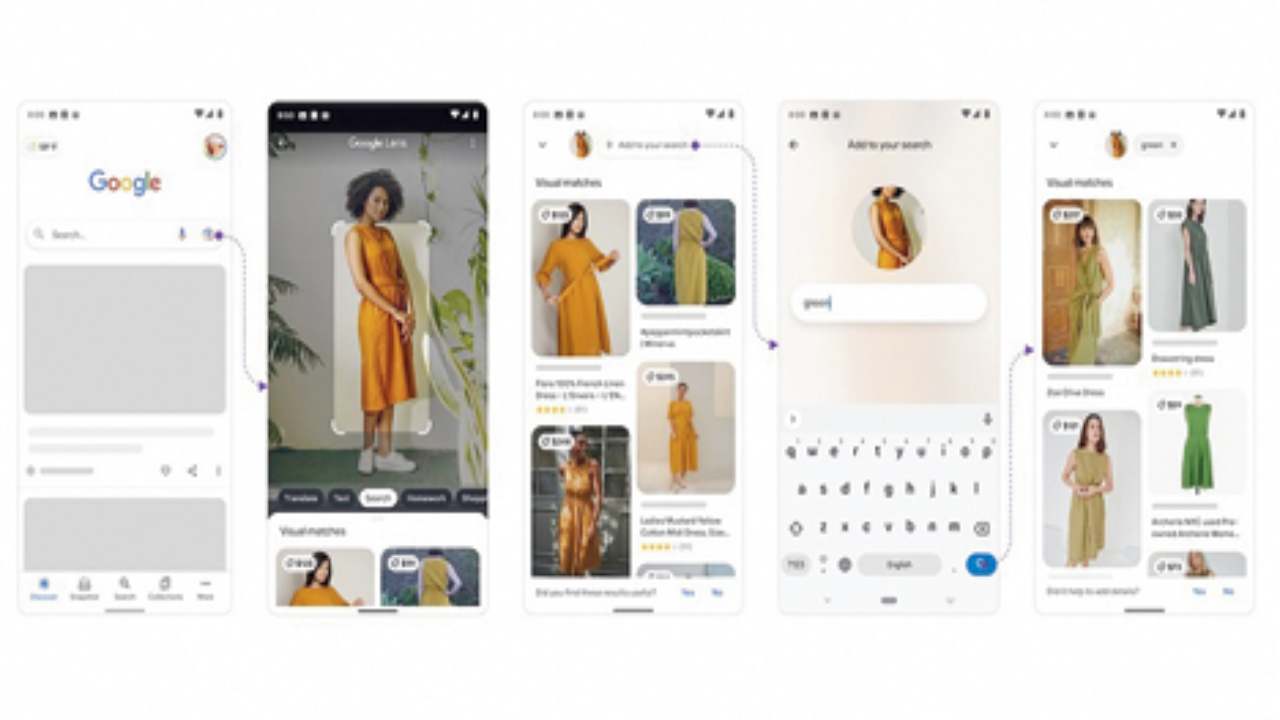

Among the many interesting announcements at Google’s Search-centric keynote in September was an upgrade to Lens, the company’s image recognition technology. Google has announced the rollout of “multisearch”, a new Lens feature that lets users search with text and images at the same time. It is available as a beta, in the US only for the time being.

An example: instead of typing into a search box, take a picture with Google Lens. If you are looking for a green dress, take a picture of a similar dress in a different colour or length and add the word “green” to your search. Or spot a pattern you like on a shirt and see it matched with similar items, or find drapes with the same pattern as your notebook. These are difficult things to search for with words alone. Or say you have a new plant but don’t know how to take care of it: take a picture and add the text “care instructions” to your search to learn more. In each case, you are combining visuals and text.

“Using text and images at the same time. With multisearch on Lens, you can go beyond the search box and ask questions about what you see,” Google said in a blog post. The new feature is made possible by Google’s latest advancements in artificial intelligence, which the company says is “making it easier to understand the world around you in more natural and intuitive ways”. The post adds: “We’re also exploring ways in which this feature might be enhanced by MUM, our latest AI model in Search, to improve results for all the questions you could imagine asking.”

Though the feature appears mostly aimed at shopping to start with, a lot more is possible. “You could imagine you have something broken in front of you, don’t have the words to describe it, but you want to fix it... you can just type ‘how to fix,’” the Google post says. The company is hoping AI models can drive a new era of search in which text alone is not enough.

But the new function may not work with something like a voice assistant, because the range of possible requests is effectively infinite. Users do have some control over whether a search puts more weight on the picture or on the text. When searching for a leafy dress that matches your notebook, for example, you will have to tell Google to look for the pattern and not for more notebooks. Lens works well, but there are times when it returns the wrong type of item, so combining images and text should help refine the search.